FMG didn't have an objective way to measure their AI products' performance

FMG was developing many different LLM-powered applications. For example, they were automating email responses for customer support and using AI to automate compliance checks on their customers' websites. They knew this was just the beginning and that they would be developing many more AI applications in the future.

The problem they faced was that they didn't have a reliable way to measure the performance of their applications.

FMG considered building an internal tool for prompt development and AI evaluation, but as they mapped out all the features they would need, they realised it would be a complex product to build and maintain. Their key priority was a platform that allowed them to:

- gather real-time feedback and monitor live performance

- measure performance objectively during development and detect regressions upon changes

- keep product leaders in the driving seat while facilitating seamless collaboration with engineers on AI feature development

Humanloop's evaluation tools allowed FMG to confidently accelerate product development

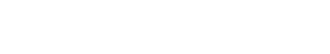

Using Humanloop's tools, the FMG team can take the guesswork out of application development. They use Humanloop's evaluators and dataset features to get objective feedback on their AI applications as they build them. This lets them compare different prompts and models as they iterate and make informed decisions about how to improve their systems.

For example, they were able to fine-tune smaller models and show that they matched the performance of GPT-4, which cut costs by a factor of 15.

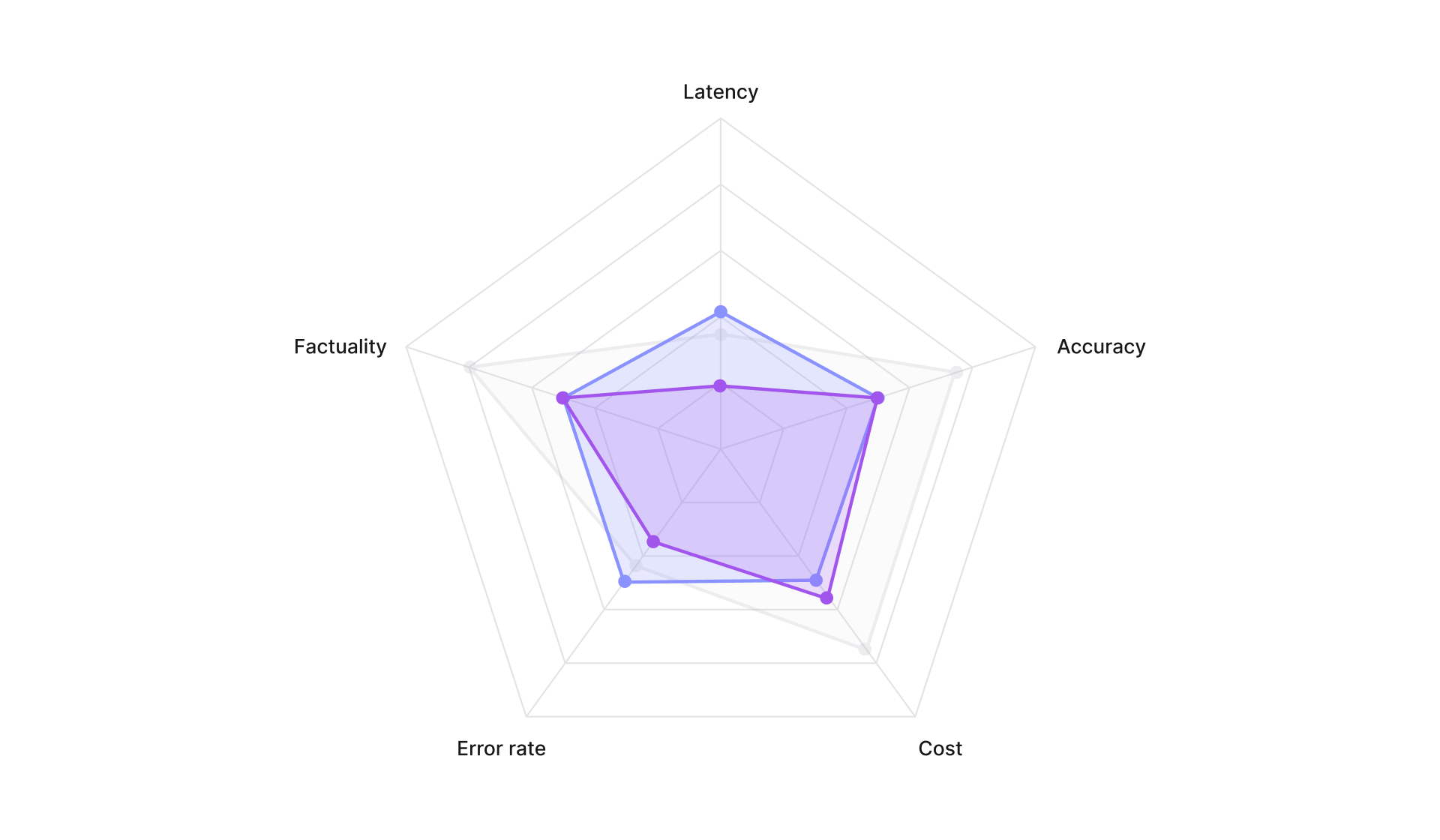

FMG's team set up a suite of evaluators in Humanloop, ranging from simple tests such as latency thresholds to more complex rule-based checks, along with explicit and implicit human feedback collected from their user-facing applications.
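To make these evaluator types more concrete, here is a minimal Python sketch. It is not Humanloop's API and not FMG's actual setup. It assumes each logged model call is a hypothetical `LoggedCall` record, uses an arbitrary 2,000 ms latency threshold, treats an empty output or a leftover `{{placeholder}}` as a rule-based failure, and uses "the user sent the draft unedited" as a stand-in implicit feedback signal. It scores a baseline and a candidate version against the same evaluators and flags any evaluator whose pass rate drops, which is the kind of regression check FMG wanted when changing prompts or models.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List


@dataclass
class LoggedCall:
    """One logged LLM call. Hypothetical record shape, for illustration only."""
    output: str
    latency_ms: float
    accepted_unedited: bool  # hypothetical implicit feedback: user sent the draft as-is


Evaluator = Callable[[LoggedCall], bool]


def latency_ok(call: LoggedCall, threshold_ms: float = 2000.0) -> bool:
    """Simple test: pass if the call finished within the (assumed) latency threshold."""
    return call.latency_ms <= threshold_ms


def no_unfilled_placeholders(call: LoggedCall) -> bool:
    """Rule-based check: fail empty outputs or leftover template markers like {{name}}."""
    text = call.output.strip()
    return bool(text) and "{{" not in text


def accepted(call: LoggedCall) -> bool:
    """Implicit human feedback: pass if the user used the output without editing it."""
    return call.accepted_unedited


# Hypothetical evaluator suite; the names are illustrative labels.
EVALUATORS: Dict[str, Evaluator] = {
    "latency": latency_ok,
    "no_placeholders": no_unfilled_placeholders,
    "implicit_feedback": accepted,
}


def pass_rate(calls: List[LoggedCall], evaluator: Evaluator) -> float:
    """Fraction of calls that pass a single evaluator."""
    if not calls:
        raise ValueError("need at least one logged call")
    return mean(1.0 if evaluator(c) else 0.0 for c in calls)


def find_regressions(
    baseline: List[LoggedCall],
    candidate: List[LoggedCall],
    evaluators: Dict[str, Evaluator],
    tolerance: float = 0.0,
) -> List[str]:
    """Names of evaluators whose pass rate dropped by more than `tolerance`."""
    return [
        name
        for name, fn in evaluators.items()
        if pass_rate(candidate, fn) < pass_rate(baseline, fn) - tolerance
    ]
```

Reporting a pass rate per evaluator, rather than one blended score, keeps a latency regression from being masked by an improvement in output quality, and vice versa.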

Humanloop saves FMG hundreds of engineering hours

Were it not for Humanloop, FMG say they would not have been able to develop their AI features or get clients to trust their AI applications. Tasks that would have taken hundreds of hours are now automated.