Humanloop Tools: Connecting LLMs to resources

Today we’re announcing Tools as a part of Humanloop. Tools allow you to connect an LLM to any API to give it extra capabilities and access to private data.

From Oracles to Agents

Language models have been described as a fuzzy database, a reasoning engine or stochastic parrots. Regardless of how good or bad your take is, in practice LLMs are a new type of computing platform.

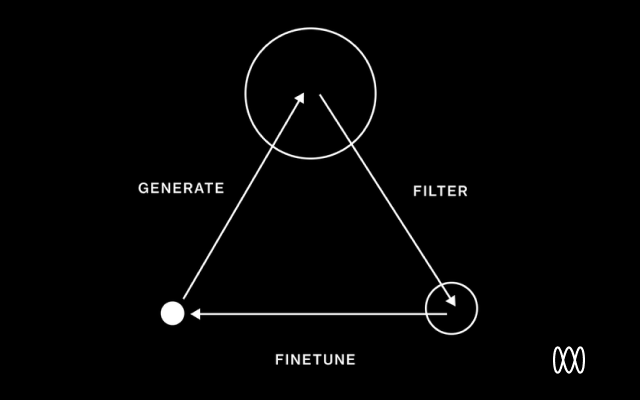

On its own, an LLM acts as an oracle: a powerful completion engine that predicts text given what’s been said so far, but ultimately a system without memory, learning or ability to act in the world. However, when an LLM is connected into a wider system it has far greater power and utility.

This is where you as a developer come in. You figure out how to connect an LLM to your system. You may add extra information into the context window through prompt stuffing, or set up a chain of events or LLM calls to create programs that achieve a task. An exciting direction is to get the LLM to decide what should be called, so that it acts as an agent.

Humanloop helps you transform the raw LLM call into a specialised task solver by assisting you in creating a good prompt, evaluating it, and then A/B testing and monitoring it once it’s deployed. Now, Humanloop will also help you combine the LLM with your systems through Tools.

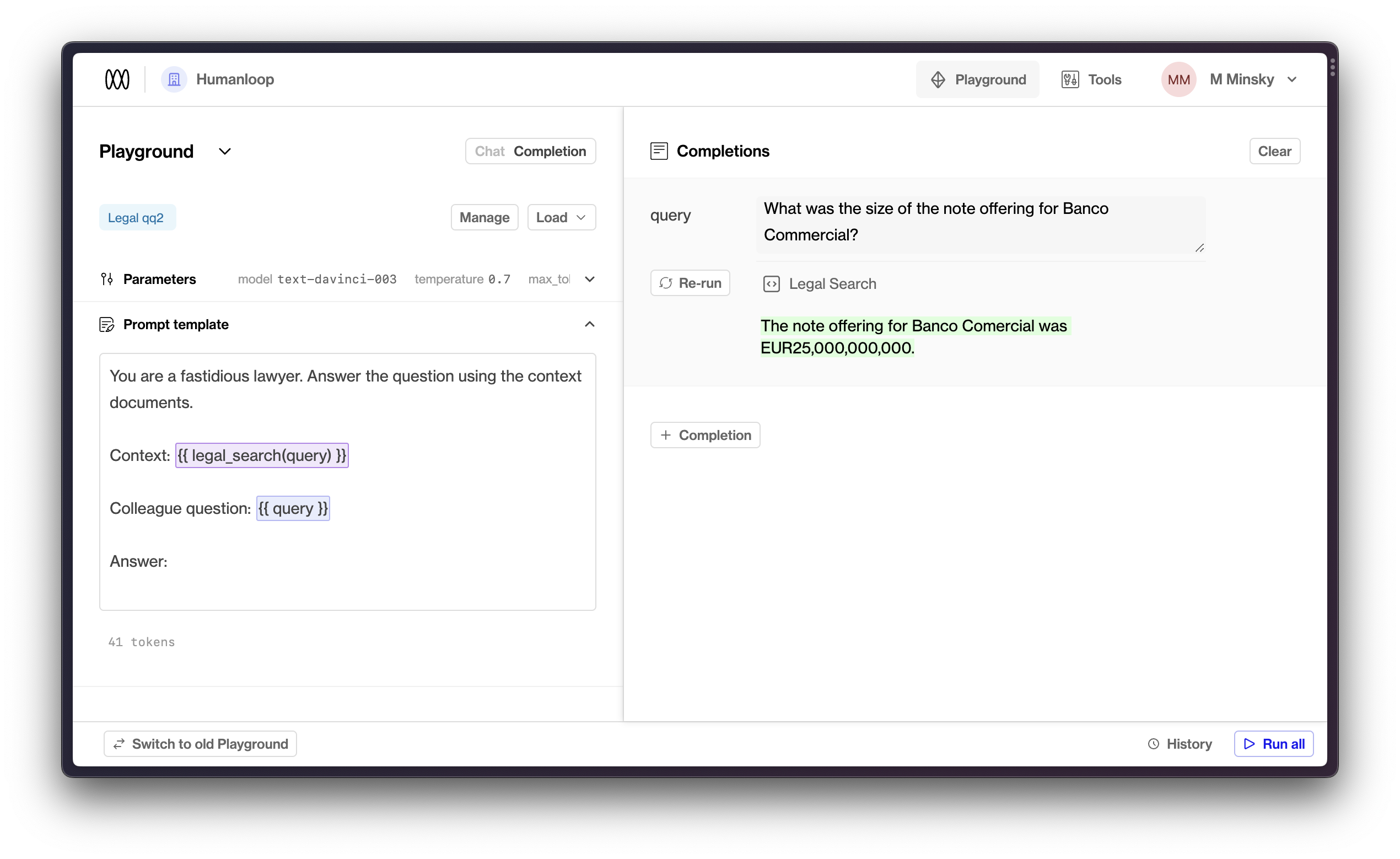

In the playground you can call Tools to add context to the prompt. In this case, a Tool adds semantic search over a database of legal documents.

What is a tool?

A tool is a way to connect an LLM to any API to give it extra capabilities. Put simply, a tool is a function call.

You can use tools to:

- connect in your data with a vector database or otherwise

- call convenience functions like current_date()

- call tools like calculate(), which make up for certain LLM weaknesses

- call other LLM functions

- give the LLM memory

- give AI agents the ability to take actions

How we’ve designed tools

A tool is a typed function that returns text. It has an added description so both humans and AI know when it should be used.
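To make the idea of "a typed function that returns text, plus a description" concrete, here is a minimal sketch. The `Tool` class and its fields are hypothetical names chosen for illustration and are not Humanloop's actual implementation; the only assumptions taken from the text are that a tool has a description and returns text.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass(frozen=True)
class Tool:
    """Hypothetical container: a named, described function that returns text."""

    name: str
    description: str  # read by humans (and potentially the model) to decide when to use it
    func: Callable[..., str]

    def __call__(self, *args, **kwargs) -> str:
        result = self.func(*args, **kwargs)
        # Enforce the "returns text" contract.
        if not isinstance(result, str):
            raise TypeError(f"tool {self.name!r} must return text")
        return result


def current_date() -> str:
    """Return today's date as text, e.g. '2023-06-30'."""
    return date.today().isoformat()


current_date_tool = Tool(
    name="current_date",
    description="Returns today's date in ISO 8601 format (YYYY-MM-DD).",
    func=current_date,
)
```

Calling `current_date_tool()` returns today's date as a string, and a tool whose function returns anything other than text fails loudly instead of silently putting a non-string into a prompt.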

If you have a tool call in the prompt, e.g. {{ google("population of india") }}, it gets executed and replaced with the resulting text before the prompt is sent to the model. If the tool call contains an input variable, e.g. {{ google(query) }}, the tool takes its argument from the input passed into the LLM call. This is simply a way of “prompt stuffing”: adding more context to the model.
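The substitution step can be sketched in a few lines of Python. This is an illustration of the idea only, not how Humanloop implements it: `render_prompt`, `fake_google` and the regular expression are all hypothetical, the search tool is a stub that makes no network call, and the sketch handles only tool calls with exactly one argument (a quoted literal or an input variable name).

```python
import re
from typing import Callable, Dict

# Matches {{ name("literal") }} or {{ name(variable) }} with a single argument.
TOOL_CALL = re.compile(r"\{\{\s*(\w+)\(\s*(.*?)\s*\)\s*\}\}")


def render_prompt(
    template: str,
    tools: Dict[str, Callable[[str], str]],
    inputs: Dict[str, str],
) -> str:
    """Replace each tool call in the template with the text the tool returns."""

    def run(match: re.Match) -> str:
        name, raw_arg = match.group(1), match.group(2)
        is_quoted = (
            len(raw_arg) >= 2 and raw_arg[0] == raw_arg[-1] and raw_arg[0] in "\"'"
        )
        # A quoted argument is used as-is; a bare name is looked up in the inputs.
        arg = raw_arg[1:-1] if is_quoted else inputs[raw_arg]
        return tools[name](arg)

    return TOOL_CALL.sub(run, template)


def fake_google(query: str) -> str:
    """Stand-in for a real search tool; returns canned text."""
    return f"[search results for: {query}]"


tools = {"google": fake_google}

literal = render_prompt(
    'Context: {{ google("population of india") }}\nAnswer the question.', tools, {}
)
variable = render_prompt(
    "Context: {{ google(query) }}", tools, {"query": "capital of France"}
)
```

Here `literal` has the tool call replaced by the stub's text for the fixed query, while `variable` resolves `query` from the inputs first. In both cases the model only ever sees the rendered text.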

Of course, the same thing would be possible with an input called {{ google_result }} that you feed in yourself, so why is this helpful?

When you’re developing an AI system you have a lot of moving parts that you have to experiment with. By being able to call these functions in the playground, you are able to iteratively improve how the total system works. You can debug any problematic real examples by bringing them into the Humanloop playground and rerunning them with a modified prompt, or by calling the tools with different parameters.

Additionally, by calling the tools within your prompts, the execution of these functions can be offloaded to Humanloop. Beyond the convenience of this, the full system is tracked with all the associated data, allowing you to debug and improve the wider system.

How to use Tools

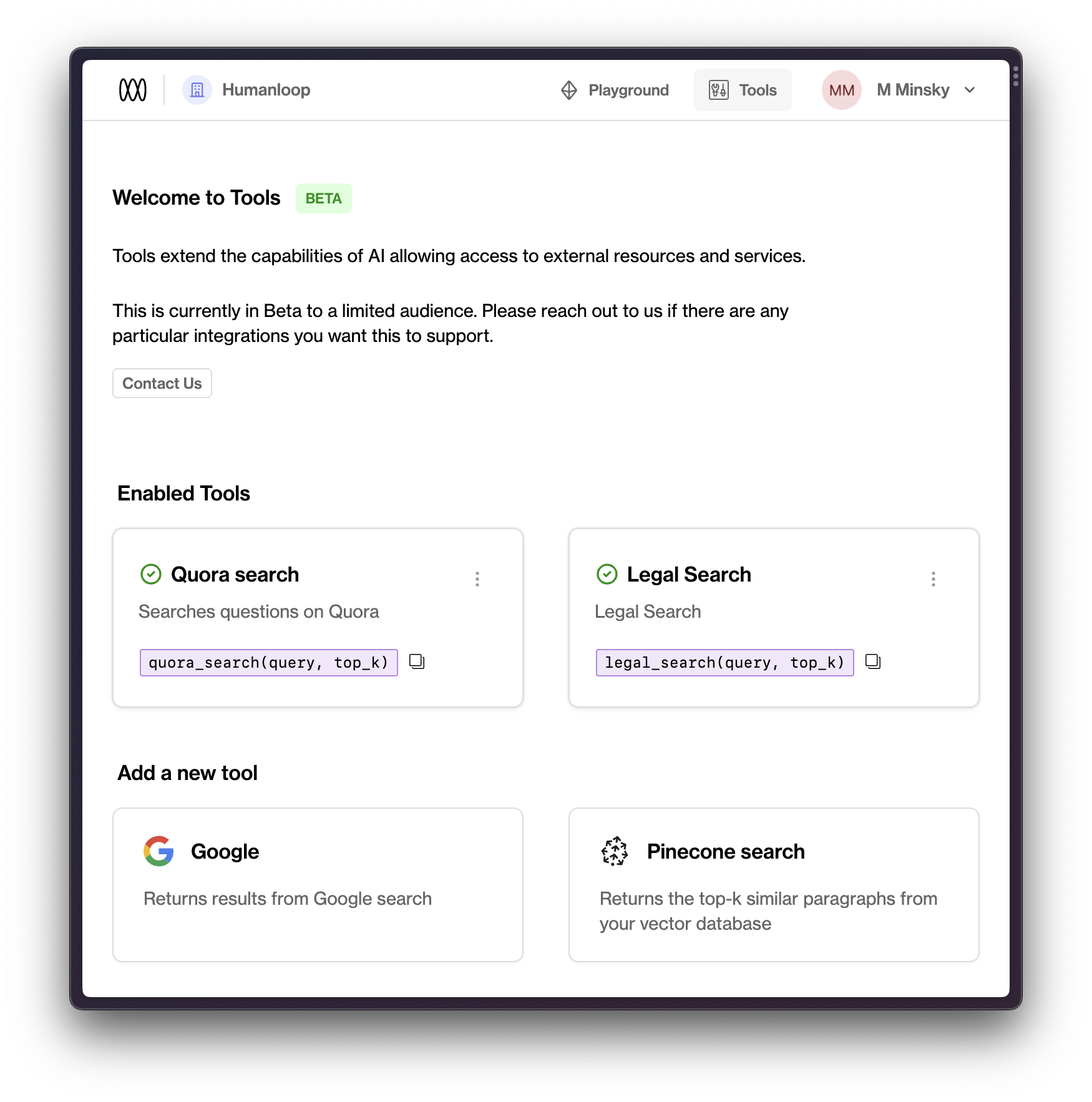

You can get started with Tools by clicking the Tools tab in the top right. You can connect services like Google (via SerpAPI) or your Pinecone database. Please give us feedback on what else you’d like to enable. You can also see our guide on setting up semantic search using Pinecone.

To get started with Tools, click the Tools tab and set up an integration to enable the Tool.

This isn’t the final version of where we’re going with Tools. OpenAI’s GPT models now support passing in tools (they call them functions), and you can get an LLM to act as an agent that dynamically controls how and when tools get called. We’ll have more to share on what we can enable in the future, but we’re excited to see what Tools enable today.
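For a sense of what "passing in tools" looks like, here is a hedged sketch of a function description in the shape OpenAI's function calling uses (a name, a description, and JSON Schema parameters), plus a small dispatcher. No API call is made: `simulated_call` is hand-written to mimic a model's choice, with arguments arriving as a JSON string, and `calculate_spec`, `calculate` and `dispatch` are hypothetical names for illustration.

```python
import json
import operator
from typing import Callable, Dict

# Description of the tool: a name, a description, and JSON Schema parameters.
calculate_spec = {
    "name": "calculate",
    "description": "Evaluate a simple arithmetic expression of the form 'a op b'.",
    "parameters": {
        "type": "object",
        "properties": {
            "expression": {"type": "string", "description": "e.g. '12 * 7'"},
        },
        "required": ["expression"],
    },
}

OPERATORS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def calculate(expression: str) -> str:
    """Evaluate 'a op b' (space separated) and return the result as text."""
    left, op, right = expression.split()
    return str(OPERATORS[op](float(left), float(right)))


def dispatch(function_call: dict, registry: Dict[str, Callable[..., str]]) -> str:
    """Run the function the model asked for, decoding its JSON string arguments."""
    func = registry[function_call["name"]]
    kwargs = json.loads(function_call["arguments"])
    return func(**kwargs)


# Hand-written stand-in for what a model might return when it picks a tool.
simulated_call = {"name": "calculate", "arguments": '{"expression": "12 * 7"}'}
result = dispatch(simulated_call, {"calculate": calculate})
```

The difference from the template approach is who decides: in the prompt, you place the tool call yourself, whereas here the model chooses the function and its arguments and your code only executes the request.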
